The notion of statistical error permeates clinical study design and analysis, forming the basis for regulatory decision-making in evidence-based medicine, where the vaunted p-value is a cornerstone of analysis. In addition, statistical error is a key factor in sample size calculations. Considering the complexity and criticality of this topic, we thought it would be helpful to provide this primer on how to control statistical error in your clinical study design.

Before we dive in, it’s important to note that p-values have become omnipresent to the extent that pushback is occurring; clinical and statistical experts alike complain that we now overuse statistical cutoffs to evaluate medical technology. These are valid concerns, but that’s a conversation for another time. For this discussion, we can safely assume that the notion of statistical error will continue to influence clinical studies for the foreseeable future. Let’s begin by discussing what the two potential types of statistical error mean in a practical sense.

Type I or Alpha (α) Error – False Positive

Type I or alpha (α) error is the incorrect conclusion that an effect exists when one does not; it can be described as a “false positive.” When a Type I error occurs, a study wrongly concludes that a device is safe and/or effective, when in fact it is not. Understandably, FDA strives to minimize Type I statistical errors to protect the public from ineffective or unsafe devices.

This type of error is caused by the play of chance, such as the role of randomness in selecting subjects or assigning interventions that result in accidentally “finding” a nonexistent effect. To clarify, it is not considered a Type I error if you find an effect in the “wrong” subjects (e.g., the control group outperformed the treatment group in a randomized design) and/or use incorrect statistical methods to reach positive conclusions.

Type I errors can be controlled through the statistical concept of, you guessed it, the p-value. If the p-value is small enough (by which we usually mean “less than 0.05” for traditional and historical reasons), it means that the effect observed in the data is unlikely to have arisen by chance, and we conclude instead that the effect is real.

Type II or Beta (β) Error – False Negative

Type II or beta (β) error is the failure to find an effect when one truly exists; it can be described as a “false negative.” This is the kind of error that hurts the sponsor because the study concludes that a device is not safe or that it is ineffective, when in fact, the device works according to expectations and a benefit “should” have been found.

This type of error arises when insufficient data were gathered to meet the statistical objectives of the study. An effect that should have been detected wasn’t, caused by either too few subjects in the dataset or too much variability in the data. The latter is a roundabout way of still saying “too few subjects,” since greater variability can be overcome with more subjects.

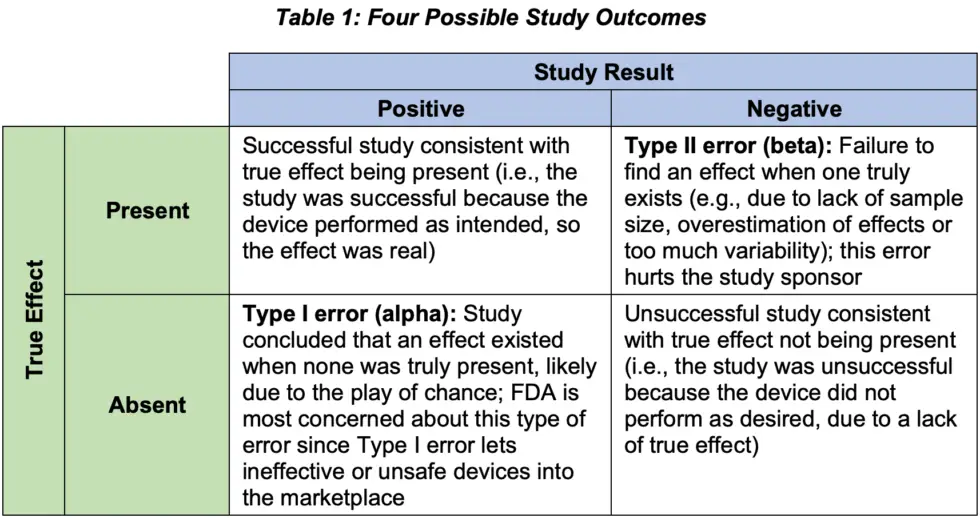

Type II errors can be controlled through sample size and power analysis. More specifically, we must ensure that a study is large enough to statistically detect the effect anticipated (i.e., show a small p-value). Table 1 summarizes the four possible outcomes for a study, illustrating the concepts we’ve discussed about Type I and II errors.

Unleash the Power

“Power” gets mentioned a lot in statistical trial design; the concept is theoretically straightforward but can be tricky to use properly. First, the definition: power is the probability that a study will meet an endpoint given the chosen design and endpoints, assumptions on effect size and variability, and planned sample size. In other words, power is the flip side of Type II error, so power equals one minus the chance of a Type II error.

Power is almost always computed before study data are collected because information about power determines how many study subjects are needed. We want 80% (maybe 90%) power, and the way to get that is to calculate a sample size that achieves this level of power.

Keep in mind that no matter how big the study, there is always some tiny chance of aberrant data, just as it is possible to flip a coin 10 times and get 10 heads in a row. For this reason, power can never be 100%. So how high is high enough? Planned power of 80% is common, though if greater confidence is desired, 90% is a stronger choice but more expensive due to the larger sample size (i.e., a higher power always requires a larger sample size).

Power is most meaningful before the study starts, since once it’s done and the data has been collected, the endpoints were either met or they were not – there are no more probabilities because the events of interest have all happened. Still, when a study fails, you may hear explanations based on “post hoc power,” which is power computed retrospectively. For example, the study report might say, “Although the a priori power was designed to be 80%, the study was ultimately underpowered for the effect size observed” or “although the endpoint was not met, we may have found a significant difference with more subjects.”

While not technically wrong, these explanations are useless since any study that fails an endpoint was underpowered post hoc, regardless of the planned (“a priori”) power. That is, the very fact that the endpoint failed means the study didn’t have enough post hoc power and vice versa, so trying to leverage power calculations after the fact is a circular argument. Instead, a smarter approach is to proactively focus on design strategy to ensure reasonable assumptions on effects and sufficient sample size for the planned power. The study may still fail, but it won’t be for lack of planning.

Statistical vs. Clinical Significance

Since the notion of statistical error is, well, statistical, it’s worth talking about the idea of “significance,” specifically that statistical and clinical significance are two different concepts.

P-values tell us nothing about the size or practical relevance of an observed benefit; they only tell us whether the benefit can be explained by chance. For example, a large study can detect small, even clinically irrelevant differences, as being statistically significant. This is not necessarily a design flaw, although designing a study to be much larger than needed is not something payers (corporations, governments, universities, research groups) normally do.

Conversely, a large clinical benefit can fail to be statistically significant if the study is small or poorly designed, because clinical significance depends on the magnitude of the effect and the importance of the outcome. Put another way, clinical significance is not a statistical issue, but rather, a matter of scientific consensus on “what a reasonable set of experts would agree.”

Conclusion

Considering the role statistical errors play in sample size determination and regulatory decision-making, it’s critical to consider the possibility for Type I or II errors when designing your study. While to err is human, finding the right balance of sample size, power, and significance will help you avoid the undesirable Type II error and increase your chances for a successful study outcome.