Welcome back to part two of our blog series on clinical trial electronic data capture (EDC) system development and maintenance. We’re glad you’re here, but we aren’t surprised. Even if you followed our tips to optimize your EDC release (see Part 1), change was in your future, and that’s a good thing for you and your study.

The EDC isn’t a one-and-done clinical tool. It lives, breathes, and evolves with your study. Remember, the Greek philosopher Heraclitus is credited with saying, “The only constant in life is change.” Our BRIGHT philosophy is, “Don’t worry, we have a process for that!” In this article, we’ll share several tips to manage change in the EDC.

Why does the EDC change?

The EDC reflects the protocol, and it is designed, tested, and released before most sites are activated and subjects are enrolled. Despite your best efforts to develop the best study protocol possible, changes, improvements, and iterations are inevitable. Maybe you discovered that the eligibility criteria were too restrictive, making it difficult to recruit and enroll subjects. Perhaps a competitive device suddenly came to market with a data-driven claim that you would also like to make, and now you need more data. Or maybe you need to add or remove data variables to support a protocol revision. Accept the inevitability of EDC change, and the process will be much more comfortable.

If you build it, they will come … and ask to change it

We all know that software apps get updated … sometimes, a lot. As EDC end users perform activities in their assigned study roles, they will no doubt share feedback to inform purposeful, iterative updates. Each update should be considered a “mini build” because robust change management requires that you cover all the same steps as in the initial build. This level of discipline protects you and your build from rash changes, especially ones that later require a corrective change of their own.

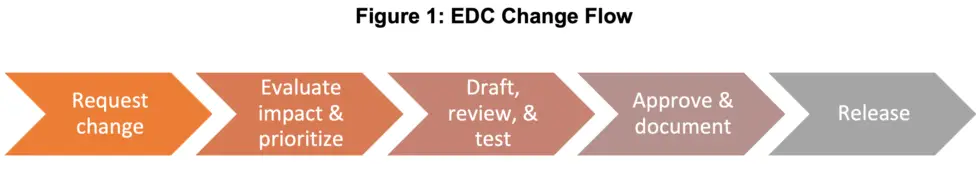

While the textbooks aren’t explicit about how ancient Greek philosophers became comfortable with new topics, it possibly involved drawing flowcharts on the ground. That’s a great starting place to illustrate EDC change flow. Therefore, behold Figure 1, a high-level approach to receiving, evaluating, and implementing EDC changes, followed by a deeper dive into each step.

1. Request a change

Any EDC user may discover an error or suggest an improvement based on their experience with the system. We recommend using a database or other collaborative tracking tool to compile and record the incoming change requests. With the right structure, you can use this tool to track the status of the entire change process. It will also provide an audit trail that records who requested the change and why, what actions were taken and when, who completed the user acceptance testing (UAT), which electronic case report forms (eCRFs) were affected, whether the change is associated with a protocol change, and whether any risk analysis or post-release actions were needed.
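To make the "right structure" a little more concrete, here is a minimal sketch of what one record in such a tracking tool might hold. The class names, field names, and status values are our own illustrative assumptions, not a prescribed schema or any particular EDC vendor's data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class AuditEntry:
    """One line of the audit trail: who did what, and when."""
    timestamp: datetime
    actor: str
    action: str


@dataclass
class ChangeRequest:
    """Hypothetical change request record; all field names are assumptions."""
    request_id: str
    requested_by: str
    reason: str
    affected_ecrfs: List[str] = field(default_factory=list)
    protocol_change: Optional[str] = None   # related protocol revision, if any
    uat_tester: Optional[str] = None        # who completed UAT
    risk_analysis_needed: bool = False
    post_release_actions: List[str] = field(default_factory=list)
    status: str = "requested"
    audit_trail: List[AuditEntry] = field(default_factory=list)

    def log(self, actor: str, action: str, new_status: Optional[str] = None) -> None:
        """Append an audit entry and optionally move the request to a new status."""
        if new_status is not None:
            self.status = new_status
        self.audit_trail.append(AuditEntry(datetime.now(timezone.utc), actor, action))


# Example: a coordinator reports a problem, then a data manager evaluates it.
cr = ChangeRequest(
    request_id="CR-001",
    requested_by="site_coordinator_a",
    reason="Visit date field rejects valid dates",
    affected_ecrfs=["Visit 2"],
)
cr.log("data_manager_b", "Evaluated impact", new_status="evaluated")
```

A spreadsheet or database table with the same columns would serve just as well; the point is that every request carries its own history.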

2. Evaluate impact & prioritize

To evaluate the impact of the change and to prioritize the request, start by asking a series of questions. Does the change represent a high-priority fix or a low-priority tweak? How will the EDC be impacted (both existing and future data) if this change is implemented? Could the request be addressed with more training rather than a database change? Answer these questions, and more, by working with your data management team to evaluate proposed changes using a risk assessment approach.

Examples of high-priority fixes are changes that correct an inability to enter or save data, or that revolutionize the way you are capturing subject data. A low-priority tweak is more along the lines of improving the layout by moving the location of a variable on the eCRF. If the change isn’t urgent, put it on a wish list. This allows you to build a critical mass of smaller changes that can be developed, tested, reviewed, and released in bulk, which streamlines your process and saves your users from working in a constant state of flux. It also prevents you from diving headlong into random iterations, which can take time without providing much value.
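The triage described above can be sketched in a few lines. This is only an illustration under stated assumptions: the flag names (`blocks_data_entry`, `changes_data_capture`, `training_can_resolve`) and the batch threshold of three are hypothetical, and a real risk assessment involves far more judgment than three booleans.

```python
from typing import Any, Dict, List


def triage(requests: List[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Sort change requests into fix-now, training, and wish-list buckets."""
    plan: Dict[str, List[str]] = {"fix_now": [], "training": [], "wish_list": []}
    for req in requests:
        if req.get("blocks_data_entry") or req.get("changes_data_capture"):
            # High priority: users cannot enter or save data, or the way
            # subject data is captured changes fundamentally.
            plan["fix_now"].append(req["id"])
        elif req.get("training_can_resolve"):
            # No database change needed; address with more training.
            plan["training"].append(req["id"])
        else:
            # Not urgent: hold for a bulk release.
            plan["wish_list"].append(req["id"])
    return plan


def batch_is_ready(wish_list: List[str], min_batch_size: int = 3) -> bool:
    """Assumed rule of thumb: release the wish list once it reaches a set size."""
    return len(wish_list) >= min_batch_size


requests = [
    {"id": "CR-001", "blocks_data_entry": True},
    {"id": "CR-002", "training_can_resolve": True},
    {"id": "CR-003"},
    {"id": "CR-004"},
]
plan = triage(requests)
```

Here `CR-001` goes straight to the fix-now bucket, `CR-002` is handled with training, and the remaining two wait on the wish list until enough small changes accumulate.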

3. Draft, review & test

Create a draft of the requested changes by incorporating them either into case report forms (CRFs) with tracked changes or into a test-environment version of the EDC. Ask the change requestor to review this first draft to ensure their request was properly interpreted and that it addresses their need.

Review the proposed change with the study director and sponsor and document their input and agreement. This ensures the team agrees to the change as well as the time associated with testing and implementation. It is important for the broader team to understand the deliberate and measured way EDC updates happen – no magic wands or fairy dust here.

After the programming is complete, perform user acceptance testing. All EDC changes, modifications, or improvements must be reviewed and tested through a UAT process. That’s right, all of them … and some more than once. We track the details of the UAT for every change in the tracking tool described in step 1 above.

Similar to the role it played in the initial EDC build, the UAT process is the best way to ensure that the proposed change works as intended and only impacts the areas intended. If the change meets user expectations and addresses their needs without a new, negative impact, proceed to the next step. If not, document the failure, go back to the drawing board, and repeat UAT until the change is fully vetted.
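One way to picture the "only impacts the areas intended" check is to compare form definitions before and after the change and flag anything that moved unexpectedly. The sketch below assumes, purely for illustration, that each eCRF can be exported as a dictionary of field names mapped to their settings; real EDC systems differ, and this does not replace hands-on UAT.

```python
from typing import Any, Dict, Set


def changed_fields(before: Dict[str, Any], after: Dict[str, Any]) -> Set[str]:
    """Return every field that was added, removed, or modified."""
    return {
        name
        for name in before.keys() | after.keys()
        if before.get(name) != after.get(name)
    }


def unintended_changes(
    before: Dict[str, Any], after: Dict[str, Any], intended: Set[str]
) -> Set[str]:
    """Return changed fields that were not part of the approved request."""
    return changed_fields(before, after) - intended


# Hypothetical form definitions: the request was to make visit_date optional.
before = {
    "visit_date": {"type": "date", "required": True},
    "weight_kg": {"type": "number", "required": True},
}
after = {
    "visit_date": {"type": "date", "required": False},
    "weight_kg": {"type": "number", "required": False},  # changed by accident
}
surprises = unintended_changes(before, after, intended={"visit_date"})
```

In this example `surprises` contains `weight_kg`, which is exactly the kind of finding that sends a change back to the drawing board before another UAT round.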

4. Approve & document

Congratulations, you passed UAT and are one step closer to release. It’s time to loop in the study director and sponsor for one final review. Your end users are awesome, but they’re a little too close to the EDC now, and you need a higher-level view of the change and its impact. Be prepared to give these reviewers time and context so they can perform a meaningful review, and yes, be prepared to tweak the change based on their feedback.

File all final documents about the change request, the changes made, the associated UAT, and the approval in the trial master file (TMF). In our case, our single tracking tool serves this purpose and is locked before it is archived. Maintain these change management records in a consistent manner so they are easily accessible for future reference and potential study audits.

5. Release the updated EDC

Break out the togas, because it’s time to party, or at least release the updated EDC that now incorporates your vetted, tested, and approved change. Remember to perform any post-release reviews (e.g., confirm all changes published as expected), send updated CRFs to the research coordinators at each site, email your monitors an overview of each change that they can in turn send to their sites, and upload any new or changed study tools (e.g., a quick reference guide) into the database.
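The five steps above can be sketched as a small state machine, which is handy if your tracking tool needs to refuse shortcuts such as releasing a change that never went through UAT. The status names and allowed transitions are our own assumptions about how the flow might be encoded, not a standard.

```python
from typing import Dict, Set

# Each status maps to the statuses it may move to next (assumed names).
ALLOWED_TRANSITIONS: Dict[str, Set[str]] = {
    "requested": {"evaluated"},
    "evaluated": {"drafted", "wish_list", "closed_training"},
    "wish_list": {"drafted"},
    "drafted": {"in_uat"},
    "in_uat": {"uat_passed", "drafted"},    # failed UAT goes back to draft
    "uat_passed": {"approved", "drafted"},  # final review may ask for tweaks
    "approved": {"released"},
    "released": set(),
    "closed_training": set(),
}


def advance(current: str, target: str) -> str:
    """Return the new status, or raise if the step would skip the process."""
    if target not in ALLOWED_TRANSITIONS.get(current, set()):
        raise ValueError(f"Cannot move a change from {current!r} to {target!r}")
    return target


# A change that fails UAT once, is reworked, then passes and is released.
status = "requested"
for step in ["evaluated", "drafted", "in_uat", "drafted", "in_uat",
             "uat_passed", "approved", "released"]:
    status = advance(status, step)
```

Trying `advance("drafted", "released")` raises a `ValueError`, which is the code equivalent of "no magic wands or fairy dust here."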

Conclusion

When the opportunity for EDC change arises – and most EDCs do have opportunity for improvement – we encourage you to follow an established process that defines how to request, evaluate, draft, review, test, approve, and release those changes. When you embrace and roll with the changes, your EDC will serve you well throughout the study; at least, that’s our philosophy.